3. Cluster Operation

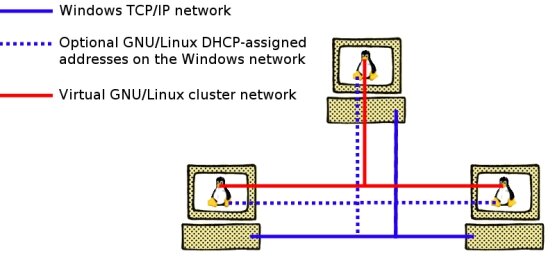

Network Topology

Two networks are involved in the operation of the Virtual Cluster Toolbox (VCT): one connecting the Windows operating systems, and a distinct network for the virtual cluster. Both networks use the same hardware (network cards, wires and switches).

We assume that the Windows computers that will host the virtual cluster are connected by an IP Ethernet network. We refer to this network as the "Windows network." The virtual cluster uses a private class "C" IP network that operates concurrently with the Windows network on the same wire. Each Windows machine may host one member (node) of the virtual cluster. Although they share the same wires, the virtual cluster network is distinct from the Windows network: virtual cluster nodes cannot directly access the Windows network, and vice versa.

To facilitate network interaction between the Windows systems and the virtual cluster nodes, each virtual cluster node requests a DHCP-assigned address on the Windows network (in addition to the private network address used in the virtual cluster). These DHCP-assigned addresses are not required for operation of the virtual cluster. They are used primarily to provide a graphical web-based interface between each cluster node and its host Windows system (see Logging in to the VCT graphically). A schematic illustration of the networks is presented in the following figure.

Figure 3.1: VCT Network Topology

The first computer that starts the VCT running acts as the front-end system. The front end:

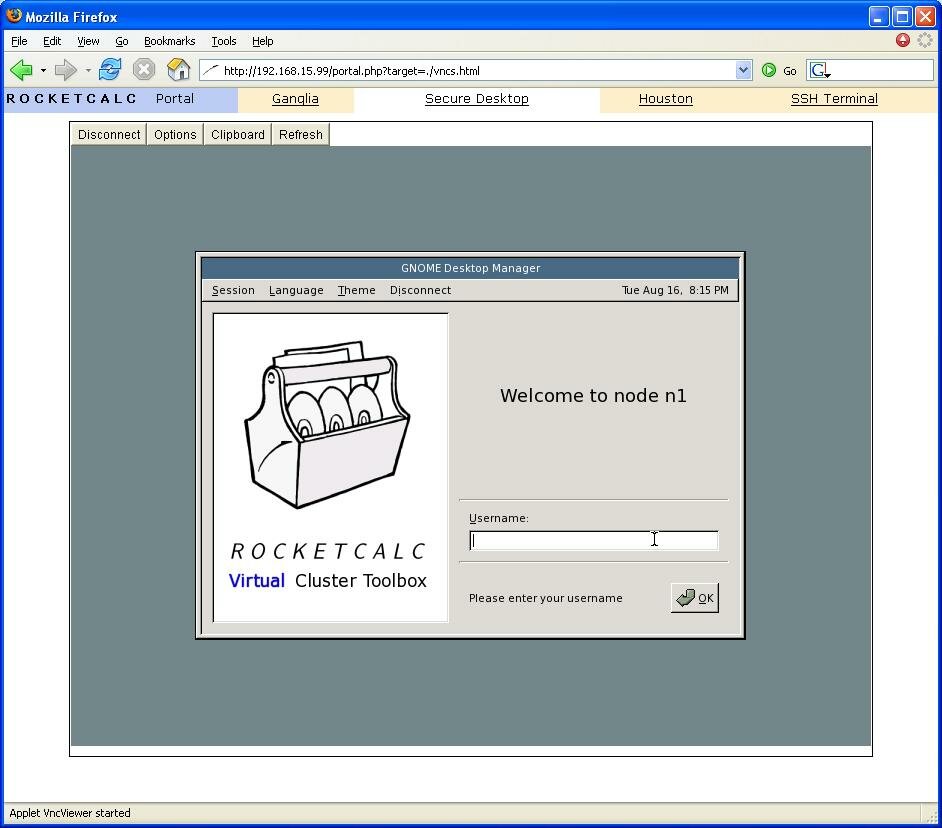

All participating computers are otherwise fully independent, with identical copies of the root file system (possibly aside from the user home directories in /home). The normal approach to VCT deployment is to select a specific computer to always act as the front-end node. If changes need to be made to the root file system after installation (e.g., adding new software packages), make them on the front-end node and copy the modified root file system to the back-end nodes. Remember, the first computer to start the VCT is the front end; it assigns IP addresses and provides the /home directory for all the remaining nodes running the VCT.

Starting up the Virtual Cluster

Select a computer to act as the front-end node and run the VCT by double-clicking on the start_vct.vbs script in the coLinux installation directory (C:\Program Files\coLinux by default). A terminal window will display the Linux boot process and a summary screen. Two default users are defined: root and rocketcalc. The default password for both accounts is rocketcalc.

Edit the start_vct.vbs script with any text editor to change miscellaneous parameters, including the amount of memory used by the VCT. The default memory allocation is 96 MB. Increase or decrease this value according to the available memory on the Windows computer.

Logging in to the VCT graphically

The VCT is configured to provide a simple graphical desktop environment served by a VNC server. The graphical environment is accessible from a Java-enabled web browser or a VNC client application. Several free, open-source VNC client applications are available for Windows, including TightVNC (http://www.tightvnc.com) and RealVNC (http://realvnc.com).

Graphical logins through VNC require a network path between the Windows computer and the VCT client operating system. VCT nodes will attempt to obtain an aliased IP address on the same subnet as the host Windows machine via DHCP.
The start-up summary screen displays all network addresses in use by the virtual cluster node. To view the configured VCT network interfaces at any time, issue the command /sbin/ifconfig from a command-line prompt. If the VCT node was able to obtain an IP address on the Windows network, point the Windows web browser to that address to view the Rocketcalc Unicluster Web Portal. Select the "Secure Desktop" option for a Java-client secure graphical desktop session (shown in Figure 3.2). Alternatively, launch a VNC viewer application on the host Windows machine and direct it to the VCT client IP address and port 5901 for direct VNC graphical access.
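Before launching a VNC viewer, it can be useful to confirm that the node's VNC port is actually reachable from the Windows host. The following Python sketch is one possible way to do that; it is not part of the VCT. It assumes the usual VNC convention that display number N listens on TCP port 5900 + N (so port 5901 corresponds to display 1), and the address 192.168.1.50 is a hypothetical placeholder for whatever address the start-up summary screen reports.

```python
import socket

VNC_BASE_PORT = 5900  # conventional base port; display N uses 5900 + N


def vnc_port(display: int) -> int:
    """Return the TCP port conventionally used by a VNC display number."""
    if display < 0:
        raise ValueError("display number must be non-negative")
    return VNC_BASE_PORT + display


def is_reachable(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port can be opened."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and unroutable addresses.
        return False


if __name__ == "__main__":
    # Hypothetical address: substitute the Windows-network address shown
    # on the node's start-up summary screen or by /sbin/ifconfig.
    node_address = "192.168.1.50"
    port = vnc_port(1)
    if is_reachable(node_address, port):
        print(f"VNC port {port} on {node_address} is reachable")
    else:
        print(f"Cannot reach {node_address}:{port}; check the node's DHCP lease")
```

A failed check usually means the node did not obtain an address on the Windows network, in which case neither the web portal nor a direct VNC session will be available from the host.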

Cluster technical details

Each node in the virtual cluster has a virtual network interface that is assigned a MAC address different from the MAC address of the "real" network adapter on the host Windows system.

• Operating system overview

The VCT is powered by the Linux 2.6.10 kernel with the coLinux patch. The operating system is based on Debian 3.1/testing. The default compiler is GCC 4.02. The kernel was compiled with GCC 3.3, which is also included.

• Network initialization

The VCT deviates slightly from standard Debian network initialization in order to support a self-organizing virtual cluster network alongside the host Windows network. Two extra initialization scripts are specified in the /etc/rcS.d directory for this purpose. The scripts are linked to /opt/rocketcalc/etc/init.d/vct_init and /opt/rocketcalc/etc/init.d/vct_external_net_init.

The VCT sets up a private class "C" subnet for cluster operation. The first node to start assumes the role of the "front-end" node. The front-end node assigns private cluster IP addresses to subsequent nodes and also provides a shared user /home directory and common user identities. The user home directories are shared across the cluster network using the NFS protocol.

The front-end node assigns private cluster IP addresses using a modified DHCP protocol, implemented by a modified version of the udhcpd program. The modified protocol does not interfere with the standard DHCP protocol used by the host Windows network, so the two networks assign addresses independently of each other. Each cluster node also attempts to obtain a DHCP lease for an address on the host Windows network. This allows the host Windows systems to communicate over the network with the nodes to support, among other things, graphical logins. Network initialization proceeds as follows:

The VCT is also configured to start a number of other services that are not part of most Debian distributions. They are:

Each node is also equipped with the standard Apache web server. The node serves a "Cluster Portal" web interface that is available to any web browser on the Windows network (assuming the node can obtain an IP address on the host Windows network).
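As a rough illustration of the two address spaces described under Network initialization, the Python sketch below uses the standard ipaddress module to inspect a private class "C" (/24) network and to check which network a given address belongs to. The subnet 192.168.200.0/24 and the lease 10.1.2.57 are hypothetical placeholders; this guide does not state the values the VCT actually uses.

```python
import ipaddress

# Hypothetical values, for illustration only.
cluster_net = ipaddress.ip_network("192.168.200.0/24")  # private cluster subnet
windows_lease = ipaddress.ip_address("10.1.2.57")       # DHCP lease on the Windows network


def describe(network):
    """Summarize a network: private flag, prefix length, usable host count."""
    # Subtract the network and broadcast addresses from the total.
    usable = network.num_addresses - 2
    return {
        "private": network.is_private,
        "prefix": network.prefixlen,
        "usable_hosts": usable,
    }


def on_cluster_network(address, network=cluster_net):
    """Return True if the address lies inside the private cluster subnet."""
    return address in network


if __name__ == "__main__":
    print(describe(cluster_net))
    print(on_cluster_network(ipaddress.ip_address("192.168.200.7")))
    print(on_cluster_network(windows_lease))
```

Under these assumptions, a node holds one address inside the cluster subnet and, when a lease is granted, a second address outside it on the Windows network, which is the address used for the web portal.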


Figure 3.2: The VCT secure remote desktop login screen.
